Deep learning architecture refers to the design and arrangement of neural networks that enable machines to learn from data and make decisions. Loosely inspired by the structure of the human brain, these architectures stack many layers of interconnected nodes, with each layer building a more abstract representation than the one before it. As data passes through these layers, the network learns to recognize patterns, extract features, and perform tasks such as classification, prediction, or generation. Deep learning architectures have brought about a paradigm shift in image recognition, natural language processing, and autonomous systems, letting computers interpret inputs with a degree of precision and adaptability once associated only with human intelligence.

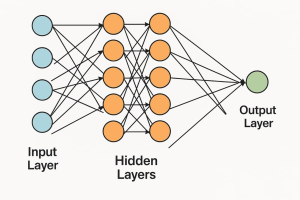

Deep Learning Architecture Diagram:

Diagram Explanation:

This illustration shows a feedforward network, a simple deep learning model in which data travels in one direction only, from input to output. It begins with an input layer, where each node represents one feature and connects to every node in the first hidden layer. The hidden layers (two layers of five nodes each) transform the data using weights and activation functions, and every node in one layer connects to every node in the next; this dense connectivity helps the network learn complicated patterns. The output layer, fully connected to the last hidden layer, produces the final prediction, using sigmoid for binary classification or softmax for multi-class classification. The arrows represent weights, which are adjusted during training to minimize the cost function.
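The forward pass through such a network can be sketched in plain Python. This is a minimal illustration, not a training-ready implementation: the three input features, the ReLU hidden activation, the random initial weights, and the helper names (`dense`, `init_layer`, `forward`) are all assumptions made for the example. Only the two hidden layers of five nodes and the sigmoid output come from the description above.

```python
import math
import random

def dense(x, weights, biases):
    """Fully connected layer: each output is a weighted sum of every input plus a bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]

def relu(values):
    return [max(0.0, v) for v in values]

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases (an arbitrary choice for this sketch)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(x, layers):
    """Pass x through the hidden layers (ReLU), then a single sigmoid output node."""
    *hidden, (w_out, b_out) = layers
    for weights, biases in hidden:
        x = relu(dense(x, weights, biases))
    return sigmoid(dense(x, w_out, b_out)[0])

rng = random.Random(0)
sizes = [3, 5, 5, 1]  # 3 input features (assumed), two hidden layers of 5, 1 output
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

prob = forward([0.2, -1.0, 0.7], layers)
print(f"predicted probability: {prob:.3f}")
```

Because the weights here are untrained, the printed probability carries no meaning; training would adjust `layers` to reduce the cost function.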

Types of Deep Learning Architecture:

- Feedforward Neural Networks (FNNs)

The simplest type of neural network, used for classification and regression. Data flows in one direction, from input to output, and this design forms the basis for more complicated architectures.

- Convolutional Neural Networks (CNNs)

CNNs process image data by applying convolutional layers to detect spatial features. They are widely used in image classification, object detection, and medical image analysis because they can capture local patterns.
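To make "detecting spatial features" concrete, here is a small sketch of the sliding-window operation at the heart of a convolutional layer. The toy image and the hand-picked vertical-edge kernel are assumptions for illustration; in a real CNN the kernel values are learned during training.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation (the operation deep learning libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# 4x4 toy image: dark left half, bright right half
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds to a dark-to-bright vertical edge
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only in the column where the edge sits, which is the sense in which a convolution captures a local pattern.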

- Recurrent Neural Networks (RNNs)

RNNs are suited to sequential data such as time series or text. Their recurrent loops carry a hidden state that summarizes previous computations, which proves useful in speech recognition and language modeling.
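The recurrence can be sketched as a single update rule applied at every time step. The tanh activation is the textbook choice for a basic RNN; the weight values, the sizes (one input feature, two hidden units), and the name `rnn_step` are hypothetical.

```python
import math

def rnn_step(x_t, h_prev, w_xh, w_hh, b_h):
    """One recurrent update: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    return [
        math.tanh(
            sum(w * x for w, x in zip(w_xh[i], x_t))
            + sum(w * h for w, h in zip(w_hh[i], h_prev))
            + b_h[i]
        )
        for i in range(len(b_h))
    ]

# Hypothetical fixed weights: 1 input feature, 2 hidden units
w_xh = [[0.5], [-0.3]]
w_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]

sequence = [[1.0], [0.5], [-1.0]]
h = [0.0, 0.0]  # initial hidden state
for x_t in sequence:
    h = rnn_step(x_t, h, w_xh, w_hh, b_h)  # the same weights are reused at every step
print(h)
```

The final `h` depends on the whole sequence, not just the last input, because each step feeds the previous hidden state back in.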

- Long Short-Term Memory Networks (LSTMs)

LSTMs are a type of RNN that can learn long-term dependencies by using gates to control the flow of information through the cell. Their main uses include machine translation, music generation, and text prediction.
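The gating mechanism can be illustrated with a single-unit LSTM cell. This sketch uses the standard forget, input, and output gates plus a candidate value; the scalar weights in `params` are made up and chosen so that the forget gate stays close to 1, which lets the cell remember an early input.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM; p maps gate name -> (w_x, w_h, bias)."""
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    i = sigmoid(pre("input"))         # how much new information to write
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    g = math.tanh(pre("candidate"))   # proposed new content
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c

# Hypothetical parameters, not learned values
params = {
    "forget": (0.0, 0.0, 5.0),     # large bias: forget gate near 1, memory persists
    "input": (1.0, 0.0, -2.0),     # opens only for large inputs
    "output": (0.0, 0.0, 5.0),
    "candidate": (2.0, 0.0, 0.0),
}

h, c = 0.0, 0.0
for x in [3.0, 0.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
print(round(c, 3))
```

After four steps of zero input, the cell state still retains most of what was written at the first step, which a plain tanh RNN would tend to wash out.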

- Variational Autoencoders (VAEs)

By adding probabilistic elements, a VAE extends the traditional autoencoder so that it can generate new data samples. VAEs are used in generative modeling of images and text.
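One common way the probabilistic element is realized is for the encoder to output a mean and a log-variance per latent dimension, from which a latent vector is sampled. The sketch below shows that sampling step (the reparameterization trick) and the KL term that is typically added to the reconstruction loss. The encoder outputs `mu` and `log_var` are made-up numbers, and no encoder or decoder network is included.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Reparameterization: z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, sigma^2) to N(0, 1), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))

rng = random.Random(0)
mu, log_var = [0.5, -1.0], [0.0, -2.0]  # made-up encoder outputs for one input

z = sample_latent(mu, log_var, rng)       # a different z on every call
kl = kl_to_standard_normal(mu, log_var)   # penalty for straying from the prior
print(z, kl)
```

Generating new data then amounts to drawing `z` from the standard normal prior and passing it through the decoder.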

- Generative Adversarial Networks (GANs)

GANs work by pitting two networks, a generator and a discriminator, against each other to create realistic data. They are known for producing high-quality images, deepfakes, and art.
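The adversarial setup shows up most clearly in the two loss functions. The sketch below assumes the widely used binary cross-entropy formulation with the non-saturating generator loss; the discriminator outputs are made-up probabilities rather than results from a trained model.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: push D(fake) toward 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Made-up discriminator outputs (probability that a sample is real)
d_real = [0.9, 0.8]
fooled = [0.7, 0.6]   # discriminator mostly believes the fakes
caught = [0.1, 0.2]   # discriminator mostly rejects the fakes

print(generator_loss(fooled), generator_loss(caught))
print(discriminator_loss(d_real, fooled), discriminator_loss(d_real, caught))
```

The two losses move in opposite directions: fakes that fool the discriminator lower the generator's loss and raise the discriminator's, which is the competition that drives training.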

- Transformers

Transformers use self-attention to process all positions of a sequence in parallel, which makes them highly effective in natural language processing. Models like BERT, GPT, and T5 use the Transformer as their backbone.
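A single-head version of scaled dot-product self-attention can be sketched in a few lines. The identity projection matrices and the three two-dimensional token vectors are placeholders for illustration; a real Transformer learns the query, key, and value projections and uses multiple heads.

```python
import math

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over a sequence of token vectors."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    scores = [
        [sum(qi * ki for qi, ki in zip(q_row, k_row)) / scale for k_row in k]
        for q_row in q
    ]
    weights = [softmax(row) for row in scores]  # each token attends to every token
    return matmul(weights, v), weights

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 tokens, 2-dimensional embeddings
identity = [[1.0, 0.0], [0.0, 1.0]]       # placeholder projection matrices
output, weights = self_attention(x, identity, identity, identity)
print(weights)
```

Every row of `weights` is computed independently of the others, so all positions can be processed at once rather than step by step as in an RNN.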

- Graph Neural Networks (GNNs)

GNNs operate on graph-structured data such as social networks or molecular structures. They learn representations by aggregating information from neighboring nodes, which makes them powerful for relational reasoning.
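Neighborhood aggregation can be sketched as one message-passing step that averages each node's vector with those of its neighbors. The toy graph, the feature values, and the choice of mean aggregation are assumptions; practical GNN layers also apply a learned weight matrix and a nonlinearity after aggregating.

```python
def message_passing_step(features, neighbors):
    """Replace each node's vector with the mean of itself and its neighbors."""
    updated = {}
    for node, vec in features.items():
        group = [vec] + [features[n] for n in neighbors[node]]
        updated[node] = [sum(vals) / len(group) for vals in zip(*group)]
    return updated

# Toy undirected path graph: a - b - c
neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
features = {"a": [1.0], "b": [0.0], "c": [-1.0]}  # hypothetical node features

after_one = message_passing_step(features, neighbors)
after_two = message_passing_step(after_one, neighbors)
print(after_one)  # {'a': [0.5], 'b': [0.0], 'c': [-0.5]}
print(after_two)  # {'a': [0.25], 'b': [0.0], 'c': [-0.25]}
```

Stacking steps widens each node's view: after two steps, node `a` has been influenced by node `c` even though they are not directly connected.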

- Autoencoders

These are unsupervised models that learn to compress and then reconstruct data. Autoencoders are used for dimensionality reduction, anomaly detection, and image denoising.
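The compress-then-reconstruct idea, and why it helps with anomaly detection, can be shown with a deliberately tiny example. The encoder and decoder below use hand-set weights rather than learned ones, and they assume that typical data lies near the line y = x; both are simplifications for illustration.

```python
def encode(point):
    """Compress a 2-D point to a single code value (hand-set weights, not learned)."""
    x, y = point
    return 0.5 * x + 0.5 * y

def decode(code):
    """Expand the 1-D code back into a 2-D point."""
    return (code, code)

def reconstruction_error(point):
    """Squared distance between a point and its reconstruction."""
    rx, ry = decode(encode(point))
    return (point[0] - rx) ** 2 + (point[1] - ry) ** 2

typical = (2.0, 2.0)    # lies on the line y = x that the code can represent
unusual = (2.0, -2.0)   # far from that line

print(reconstruction_error(typical))  # 0.0
print(reconstruction_error(unusual))  # 8.0
```

Inputs that resemble the data the model was fitted to reconstruct well, while unusual inputs produce a large error, which is the signal used to flag anomalies.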

- Deep Belief Networks (DBNs)

DBNs are built by stacking multiple restricted Boltzmann machines. They are used for unsupervised feature learning and for pretraining deep networks, which are then fine-tuned with supervised learning.

Conclusion:

Deep learning architectures are the backbone of modern AI systems. Each type, be it a simple feedforward network or an advanced transformer, possesses unique strengths suited to particular applications. With the continuing evolution of deep learning, hybrid architectures and efficient models are poised to spark breakthroughs in healthcare, autonomous systems, and generative AI.