Scientists from the Division of Sustainable Energy and Environmental Engineering at Osaka University employed deep learning artificial intelligence to improve mobile mixed reality generation.

They found that occluding objects recognized by the algorithm could be dynamically removed using a video game engine. This work may lead to a revolution in green architecture and city revitalization.

Mixed reality (MR) is a type of visual augmentation in which real-time images of existing objects or landscapes can be digitally altered. As anyone who has played Pokémon GO or similar games knows, looking at a smartphone screen can feel almost like magic when characters appear alongside real landmarks.

This approach can be applied to more serious undertakings as well, such as visualizing what a new building will look like once the existing structure is removed and trees are added. However, this kind of digital erasure was thought to be too computationally intensive to generate in real time on a mobile device.

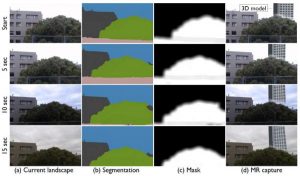

Now, researchers at Osaka University have demonstrated a new system that can construct an MR landscape visualization faster with the help of deep learning. The key is to train the algorithm with thousands of labelled images so that it can more quickly identify occlusions, like walls and fences.

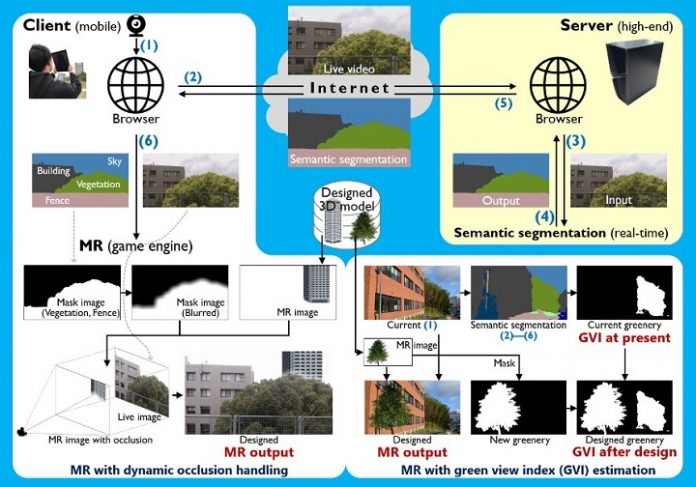

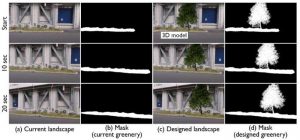

This allows for the automatic “semantic segmentation” of the view into elements to be kept and others to be masked. The program also quantitatively measured the Green View Index (GVI), which is the fraction of a person’s visual field occupied by greenery such as plants and trees, for either the current or the proposed layout. “We were able to implement both dynamic occlusion and Green View Index estimation in our mixed reality viewer,” the corresponding author Tomohiro Fukuda says.
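As a rough illustration of the GVI idea, the sketch below counts the share of pixels in a segmentation result that are labelled as greenery. It is not the researchers' code: the function name `green_view_index`, the class IDs, and the toy label map are all assumptions made for this example.

```python
from typing import Iterable, Sequence

# Hypothetical class ID for greenery; the label set used in the study is not given.
VEGETATION_LABELS = {8}


def green_view_index(label_map: Sequence[Sequence[int]],
                     green_labels: Iterable[int] = VEGETATION_LABELS) -> float:
    """Return the fraction of pixels whose class label counts as greenery."""
    green = set(green_labels)
    total = sum(len(row) for row in label_map)
    if total == 0:
        raise ValueError("label_map is empty")
    green_pixels = sum(1 for row in label_map for label in row if label in green)
    return green_pixels / total


# Toy 3x4 segmentation result (assumed IDs): 8 = vegetation, 2 = building, 0 = road.
current_view = [
    [2, 2, 8, 8],
    [2, 2, 8, 0],
    [0, 0, 0, 0],
]
gvi = green_view_index(current_view)  # 3 of the 12 pixels are vegetation
```

Running the same function on the segmentation of a proposed layout would give a number that can be compared directly with the current view.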

Live video is sent to a semantic segmentation server, and the result is used to render the final view with a game engine on the mobile device. Proposed structures and greenery can be shown even when the viewing angle is changed.
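The final compositing step can be pictured as a per-pixel choice between the camera image and the rendered image, driven by the segmentation mask. The sketch below is a simplified stand-in written in Python for readability; in the system described, this step is performed by a game engine, and the names `composite` and `keep_mask` are hypothetical.

```python
def composite(camera, rendered, keep_mask):
    """Pick the camera pixel where keep_mask is True, else the rendered pixel.

    All three arguments are equally sized 2D lists (rows of pixels).
    """
    if not (len(camera) == len(rendered) == len(keep_mask)):
        raise ValueError("inputs must have the same number of rows")
    output = []
    for cam_row, ren_row, mask_row in zip(camera, rendered, keep_mask):
        if not (len(cam_row) == len(ren_row) == len(mask_row)):
            raise ValueError("rows must have the same length")
        output.append([cam if keep else ren
                       for cam, ren, keep in zip(cam_row, ren_row, mask_row)])
    return output


# Toy 2x2 frame: "C" marks a camera pixel, "V" a pixel of the virtual design.
camera = [["C", "C"], ["C", "C"]]
rendered = [["V", "V"], ["V", "V"]]
keep_mask = [[True, False], [False, True]]  # True = real element to keep
frame = composite(camera, rendered, keep_mask)
```

Because the mask is recomputed for each incoming video frame, the choice of which real elements stay visible can follow the camera as the viewing angle changes.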

“Internet speed and latency were evaluated to ensure real-time MR rendering,” first author Daiki Kido explains. The team hopes this research will help stakeholders understand the importance of GVI in urban planning.