Jon Gabay, Mouser Electronics

Artificial intelligence (AI) is infiltrating nearly every industry and discipline. From everyday applications like Google Maps to factory automation and self-parking cars, AI is here, and it’s not going away.

AI may become the new norm for systems we interact with and depend on. That means it will also be the norm for system designers who want to make modern and competitive products. But AI poses design challenges to engineers classically trained in analog and digital logic design. Fortunately, there are devices, libraries, educational resources, and development kits to help shorten the learning curve.

Divergence of Design Techniques and Terminologies

While classically trained design engineers use a top-down approach to architecting systems, the AI design approach can be looked at using a double-diamond method where goals and objectives are defined in the first diamond (Discover and Define). Idea generation and arriving at solutions that work can be part of the second diamond (Develop and Deliver).

The first task is to identify the type of AI to be designed. Artificial Narrow Intelligence learns and carries out particular tasks. Artificial General Intelligence, in which machines can think, reason, learn, and act independently, is the future of AI.

Machine learning is a subset of AI that allows machines to learn instead of being instructed. Google defines machine learning as a subfield of artificial intelligence that comprises techniques and methods to develop AI by getting computer programs to do something without programming super-specific rules. As a result, digital, software-based AI can be viewed as a set of statistical algorithms that find patterns in massive amounts of data and then use those patterns to make predictions. Advertisers and service providers use this technique to suggest what to watch or what you may be interested in buying.

Types of AI Learning

Machine learning aims to create solutions that maintain a high level of accuracy. So far, finding and applying patterns follows four ways of learning: Supervised, Unsupervised, Reinforced, and Deep learning.

Supervised Learning: With supervised learning, a human provides labeled data to tell the machine what it should seek. The model then trains on those examples so that it can recognize the same patterns in new, unlabeled data. This approach relies on classification and regression algorithms, which predict output values from the features of the input data. Designers can code standard digital hardware and processors to run the heuristic algorithms that solve these problems.
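As a rough illustration of learning from labeled data, the sketch below trains a nearest-centroid classifier in plain Python. The sensor readings, the labels, and the function names `train_centroids` and `predict` are hypothetical and chosen only for this example; a production design would more likely rely on an established machine learning library.

```python
from collections import defaultdict
from math import dist


def train_centroids(samples, labels):
    """Average the feature vectors of each label to get one centroid per class."""
    sums = {}
    counts = defaultdict(int)
    for features, label in zip(samples, labels):
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest to the new, unlabeled sample."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labeled training data: (temperature in C, vibration in g) from a motor.
samples = [(40.0, 0.2), (42.0, 0.3), (41.0, 0.25), (75.0, 1.1), (80.0, 1.3), (78.0, 1.2)]
labels = ["normal", "normal", "normal", "fault", "fault", "fault"]

model = train_centroids(samples, labels)
print(predict(model, (43.0, 0.28)))
print(predict(model, (77.0, 1.0)))
```

The labels do the teaching here: the model never sees a rule such as "hot and shaky means fault", only examples that a person has already categorized.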

Unsupervised Learning: Unsupervised learning doesn’t label input data. The machine simply looks for all patterns in the data set. This requires fewer human resources but can take longer to produce results that maintain the desired accuracy levels. It can also discover new and unexpected things. Again, standard hardware and coding techniques can be used to implement the clustering and dimensionality-reduction algorithms that are commonly used for unsupervised learning machines.
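Clustering can be sketched with a bare-bones k-means loop. The example below is a simplified illustration, not a tuned implementation: the data points and the two starting centroids are made up, and the function name `k_means` is only a label for this sketch.

```python
from math import dist


def k_means(points, centroids, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then re-average."""
    centroids = [tuple(c) for c in centroids]
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its members; keep it if the cluster is empty.
        centroids = [
            tuple(sum(axis) / len(members) for axis in zip(*members)) if members else c
            for members, c in zip(clusters, centroids)
        ]
    return centroids, clusters


# Hypothetical unlabeled measurements; no one has said which group each belongs to.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]

centroids, clusters = k_means(points, centroids=[(0.0, 0.0), (10.0, 10.0)])
print(centroids)
print(clusters)
```

No labels are supplied at any point. The two groups emerge only from the distances between the points, which is the sense in which the machine "looks for patterns" on its own.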

Reinforced Learning: An exciting approach to machine learning is reinforced learning, more commonly called reinforcement learning. No training data is provided to seed the learning process. Instead, the algorithms are thrown into the fire to learn by trial and error. Like psychological behavior modification techniques, a system of rewards and penalties is used to steer the machine's development toward desired behaviors.
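The reward-and-penalty idea can be sketched with tabular Q-learning, one common trial-and-error technique and not necessarily the one any given product uses. Everything in the example is an assumption made for illustration: a five-cell corridor with the goal at the right end, a small penalty for every move that does not reach the goal, and the particular learning parameters.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # step left or step right
GOAL_REWARD, STEP_PENALTY = 1.0, -0.1


def step(state, action):
    """Environment: move within the corridor, reward the goal, penalize every other move."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, GOAL_REWARD, True
    return next_state, STEP_PENALTY, False


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values purely from rewards and penalties, with no training data."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]    # q[state][action index]
    for _ in range(episodes):
        state = 0
        for _ in range(100):                      # cap the length of one episode
            if rng.random() < epsilon:
                a = rng.randrange(2)              # explore: try a random action
            else:
                a = max((0, 1), key=lambda i: q[state][i])  # exploit the best estimate
            next_state, reward, done = step(state, ACTIONS[a])
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q


q_table = train()
policy = ["left" if row[0] > row[1] else "right" for row in q_table[:-1]]
print(policy)
```

The agent is never told that the goal lies to the right. It settles on that behavior because moves toward the goal accumulate higher estimated value than moves away from it.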

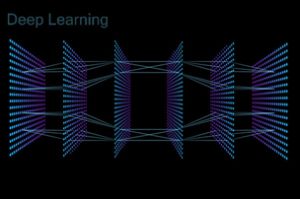

Deep learning: Deep learning is perhaps the best tool for AI and the one that most often gets the spotlight. It is different from the other three approaches in that it uses a complex structure of neural networks. Neural networks use multiple processing layers to extract higher and higher-level features and trends. Each layer is made up of neurons, network connection points that decide whether to block or pass a signal to the next higher level (Figure 1).

Figure 1: Layers of neural networks present higher and higher levels of learned extraction. (Source: AndS – stock.adobe.com)
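To make the layered structure concrete, the sketch below runs a forward pass through a tiny two-layer network. The weights are set by hand for illustration rather than learned from data, and the helper names `dense` and `forward` are assumptions of this example. The hidden layer extracts two intermediate features, and the output layer combines them into an exclusive-OR result that no single neuron of this kind could produce alone.

```python
def relu(x):
    """Pass positive signals through and block negative ones."""
    return max(0.0, x)


def dense(inputs, weights, biases, activation):
    """One layer: each neuron sums its weighted inputs plus a bias, then applies the activation."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(x):
    # Hidden layer: neuron 1 fires if any input is on, neuron 2 only if both are on.
    hidden = dense(x, weights=[[1.0, 1.0], [1.0, 1.0]], biases=[0.0, -1.0], activation=relu)
    # Output layer: combine the two hidden features into the final answer.
    output = dense(hidden, weights=[[1.0, -2.0]], biases=[0.0], activation=lambda v: v)
    return output[0]


for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(pair, forward(pair))
```

In a real deep learning system the weights are found by training on large data sets, and there are many more layers and neurons, but each layer performs the same weighted-sum-and-activate step shown here.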

Deep learning imitates the way humans gain certain types of knowledge. It shines with vast amounts of sequential data and is useful for the most advanced challenges like facial recognition, medical diagnosis, language translation, and self-driving and self-navigating vehicles. McKinsey Analytics found that deep learning reduced error rates by 41 percent, 27 percent, and 25 percent [1] on complex tasks like image classification, facial recognition, and voice recognition, respectively.

Mastering a New Type of Logic

Instead of logic gates that implement AND, OR, and NOT functionality, neural networks can be seen as a logic system that uses majority and minority gates. A majority gate produces an active output when most of its inputs are active. A minority gate produces an active output when only a minority of its inputs are active.
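A short sketch may help connect these gates to familiar logic. The functions below model majority and minority gates as simple thresholds on the number of active inputs; the function names are chosen for this example only. Tying one input of a three-input majority gate to a constant recovers AND and OR, and a single-input minority gate behaves as NOT.

```python
def majority(*inputs):
    """Active (1) when more than half of the inputs are active."""
    return int(sum(inputs) > len(inputs) / 2)


def minority(*inputs):
    """Active (1) when fewer than half of the inputs are active."""
    return int(sum(inputs) < len(inputs) / 2)


def and_gate(a, b):
    # With the third input tied low, both remaining inputs must be active.
    return majority(a, b, 0)


def or_gate(a, b):
    # With the third input tied high, one active input is enough.
    return majority(a, b, 1)


def not_gate(a):
    # A one-input minority gate is active only when its input is inactive.
    return minority(a)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b))
print("NOT 0:", not_gate(0), "NOT 1:", not_gate(1))
```

Seen this way, a majority gate is a neuron with equal weights and a fixed threshold, so the classic gates are special cases of the more general threshold element.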

A neural clump of these types of logic elements can be layered to detect and filter patterns in large data sets. It is said that deep learning functions like a human brain that takes in a vast amount of sensory information and processes and filters it into a real-time usable form.

Supervised, unsupervised, and reinforced learning systems can be implemented using standard digital circuit elements, but they require very large pools of memory and storage as well as high-speed communications in and out. Deep learning presents a problem for this approach: designing at the gate level is not a time- or cost-effective way to implement exponentially more complex systems. Multiple processor cores can simulate a majority- and minority-based multi-level network, but a simulated system will always be much slower than a true hardware-based approach. An actual deep learning machine will need highly dense, flexible, and agile neural network chips and systems.

On the Forefront

Many organizations are working on this problem with different approaches. In one case, thin-film arrays of read-only resistive synapses are being developed to form a programmed network that can contain pre-captured data and knowledge. Another approach is an array of programmable synapses and amplifiers serving as electronic neurons.

IC makers like Intel, Qualcomm, NVIDIA, Samsung, AMD, Xilinx, IBM, STMicroelectronics, NXP, MediaTek, HiSilicon, Lightspeeur, and Rockchip all offer or plan to provide highly sophisticated neural network chips, IP, and development systems.

Both digital multicore approaches and neural synaptic multi-level architectures are being used. There are even digital self-learning chips like the Loihi 2 from Intel, which Intel describes as a neuromorphic device and touts as the world’s first-of-its-kind self-learning chip. NVIDIA is building bigger and faster GPU systems such as the DGX-2, which uses NVSwitch interconnects and is touted as delivering two petaflops of performance.

A detailed description of all AI and deep learning devices and technologies is beyond the scope of this introductory article, but there are ways to get started right away. Development kits from distributors like Mouser offer single-board computers and modules that are supported by various AI IP and libraries and that use some of the AI and deep learning technologies mentioned. For example, the Seeed Studio 110991205, based on the Rockchip RK3399, takes the digital multicore approach and supports standard digital I/O and device interfaces, along with application demonstrations and example code.

A more advanced solution comes from IBM with its TrueNorth neuromorphic ASIC, based on the DARPA SyNAPSE program. It contains 4096 cores and implements 268 million programmable synapses using 5.4 billion transistors. Its ultra-low power consumption of 70 mW is impressive; IBM claims this is 1/100,000 the power density of conventional processors.

Conclusion

Partnerships being forged, like STMicroelectronics’ adoption of Google’s TensorFlow machine learning libraries, are poised to provide rapid development, training, and deployment of AI-based learning machines. These will rapidly become a bigger part of our lives. Similarly, IBM has partnered with NVIDIA around its POWER9 processors to provide GPU- and FPGA-based accelerators that support OpenCAPI 3.0 and PCI Express 4.0, positioning itself to work with very high-speed interconnects for moving data in and out.

We are already using AI, sometimes without even realizing it. The GPS apps that dynamically route us around traffic obstacles are one example. Those suggestive ads and recommendations for what to watch or listen to are another. Self-driving and self-parking cars are yet another. When seamlessly integrated into our lifestyles, AI may be a beneficial way to serve man, as long as it is not creating a cookbook.