STM32Cube.AI v7.2, released recently, brings support for deeply quantized neural networks, thus enabling more accurate machine learning applications on existing microcontrollers. Launched in 2019, STM32Cube.AI converts neural networks into optimized code for STM32 MCUs. The solution relies on STM32CubeMX, which assists developers in initializing STM32 devices. STM32Cube.AI also uses X-CUBE-AI, a software package containing libraries to convert pre-trained neural networks. Developers can use our Getting Started Guide to start working with X-CUBE-AI from within STM32CubeMX and try the new feature. The added support for deeply quantized neural networks has already found its way into a people-counting application created with Schneider Electric.

STM32Cube.AI: From Research to Real-World Software

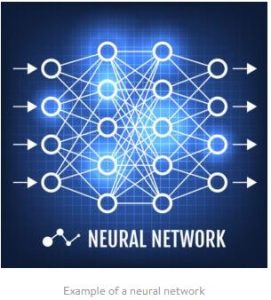

In its simplest form, a neural network is a series of layers: an input layer, an output layer, and one or more hidden layers in between. Each layer contains nodes, and each node is connected to one or more nodes in the next layer. In a nutshell, information enters the network through the input layer, travels through the hidden layers, and comes out through the output nodes. Deep learning refers to neural networks with more than three layers in total (input and output included), the word “deep” pointing to multiple intermediate layers.
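To make the layer-by-layer flow concrete, here is a minimal Python sketch of a forward pass through a small fully connected network with one hidden layer (three layers in total, so not yet “deep”). The layer sizes, the weight and bias values, and the `dense`/`forward` helpers are hypothetical, chosen only for illustration; they are not part of STM32Cube.AI.

```python
# Hypothetical tiny network: 3 inputs -> 4 hidden nodes -> 2 output nodes.
# All weights and biases are made-up values for illustration only.

def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each node computes activation(sum(w * x) + b)."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


def forward(x, layers):
    """Pass the input through each (weights, biases, activation) layer in order."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x


hidden_layer = (
    [[0.5, -0.5, 1.0],
     [1.0, 0.25, -1.0],
     [-0.5, 1.0, 0.25],
     [0.25, 0.25, 0.25]],
    [0.0, 0.5, -1.0, 0.0],
    relu,
)
output_layer = (
    [[1.0, -1.0, 0.5, 0.0],
     [-0.5, 1.0, 1.0, 0.5]],
    [0.0, 0.25],
    identity,
)

scores = forward([1.0, 2.0, 3.0], [hidden_layer, output_layer])
predicted = scores.index(max(scores))  # index of the output node with the highest score
print(scores, predicted)
```

Adding more hidden layers to the `layers` list is all it takes, structurally, to turn this shallow network into a deep one.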

What Are Quantized and Binarized Neural Networks?

To determine how information travels through the network, a neural network relies on weights and biases, parameters inside each node that are learned during training and influence the data as it moves through the network. Each node also uses an activation function to determine how to transform its input value. Weights are coefficients that scale a node’s inputs. The more precisely the weights are represented (the more bits per weight), the more accurate the network can be, but the more memory and computation it requires. Hence, to improve performance, developers can use quantized neural networks, which use lower-precision weights. The most efficient quantized neural network is a binarized neural network (BNN), which uses only two values for weights and activations: +1 and -1. As a result, a BNN demands very little computational power but is also typically the least accurate.
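The following Python sketch illustrates both ideas on a handful of made-up weights: symmetric uniform quantization to n bits (one common scheme, assumed here with max-abs scaling; real toolchains may choose scales differently) and binarization to +1/-1. It also shows why BNNs are cheap to run: once ±1 values are packed as bits, a dot product reduces to an XNOR followed by a population count. All function names are hypothetical helpers, not an STM32Cube.AI API.

```python
def quantize_symmetric(weights, bits):
    """Map floats to signed integers in [-qmax, qmax], qmax = 2**(bits-1) - 1.
    The shared scale is chosen so the largest |weight| maps to qmax (max-abs scaling)."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integers and the shared scale."""
    return [v * scale for v in q]


def binarize(values):
    """Sign binarization to {-1, +1}; this sketch maps 0 to +1."""
    return [1 if v >= 0 else -1 for v in values]


def pack(signs):
    """Pack a {-1, +1} vector into an int: element i -> bit i, with +1 stored as 1."""
    return sum(1 << i for i, s in enumerate(signs) if s == 1)


def binary_dot(a_bits, b_bits, n):
    """Dot product of two packed n-element {-1, +1} vectors.
    XNOR sets a bit where the signs match; each match adds +1 and each mismatch -1,
    so dot = matches - (n - matches) = 2 * matches - n."""
    mask = (1 << n) - 1
    matches = bin(~(a_bits ^ b_bits) & mask).count("1")
    return 2 * matches - n


weights = [0.8, -0.35, 0.1, -1.0]
q8, s8 = quantize_symmetric(weights, 8)
q4, s4 = quantize_symmetric(weights, 4)

# Worst-case reconstruction error grows as the bit width shrinks.
err8 = max(abs(w - d) for w, d in zip(weights, dequantize(q8, s8)))
err4 = max(abs(w - d) for w, d in zip(weights, dequantize(q4, s4)))

a = binarize(weights)  # binarization keeps only the sign of each weight
b = [1, 1, -1, -1]
print(q8, q4, err8 < err4, binary_dot(pack(a), pack(b), 4))
```

On a microcontroller, the same XNOR/popcount trick processes 32 binary weights per machine word, which is where much of a BNN’s speed and memory advantage comes from.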

Why Do Deeply Quantized Neural Networks Matter?

The industry’s challenge was to find a way to simplify neural networks so they can run inference on microcontrollers without sacrificing so much accuracy that the network becomes useless. To solve this, researchers from ST and the University of Salerno in Italy worked on deeply quantized neural networks (DQNNs). DQNNs use only low-bit-width weights (from 1 bit to 8 bits) and can contain hybrid structures in which only some layers are binarized while others use a quantizer with a higher bit width. The research paper by ST and the university researchers showed which hybrid structures offered the best results while achieving the lowest RAM and ROM footprint.
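A back-of-the-envelope calculation shows why a hybrid structure can shrink the ROM footprint so much. The Python sketch below counts weight storage only (activations, which drive RAM usage, and any per-layer scale factors are ignored) for a hypothetical four-layer network. The layer names and parameter counts are invented for illustration and do not come from the ST and University of Salerno paper.

```python
import math

# Hypothetical network: (layer name, number of weights). Illustrative values only.
LAYERS = [
    ("conv1", 864),
    ("conv2", 18_432),
    ("conv3", 73_728),
    ("dense", 2_560),
]


def weight_bytes(layers, bits_per_layer):
    """Weight storage in bytes when layer i stores each weight on bits_per_layer[i] bits.
    Assumes each layer's weights are packed densely and padded to a whole byte."""
    return sum(math.ceil(count * bits / 8)
               for (_, count), bits in zip(layers, bits_per_layer))


configs = {
    "float32": [32, 32, 32, 32],
    "all 8-bit": [8, 8, 8, 8],
    # Hybrid: keep the first and last layers at 8 bits, binarize the large middle layers.
    "hybrid 8/1/1/8": [8, 1, 1, 8],
    "fully binarized": [1, 1, 1, 1],
}

footprint = {name: weight_bytes(LAYERS, bits) for name, bits in configs.items()}
for name, size in footprint.items():
    print(f"{name:>16}: {size:>7} bytes")
```

In this made-up case, binarizing only the two large middle layers brings the weights from about 382 KB in float32 to under 15 KB, close to the fully binarized size, while the first and last layers, which are commonly kept at higher precision, retain 8 bits. Finding the split that preserves accuracy is exactly the trade-off the hybrid-structure research explores.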

The new version of STM32Cube.AI is a direct result of those research efforts. Version 7.2 supports deeply quantized neural networks, so applications can benefit from the efficiency of binarized layers without destroying accuracy. Developers can use frameworks such as QKeras or Larq, among others, to train their network before processing it through X-CUBE-AI. Moving to a DQNN reduces memory usage, enabling engineers to choose more cost-effective devices or to use a single microcontroller for the entire system instead of multiple components. STM32Cube.AI thus continues to bring more powerful inference capabilities to edge computing platforms.

From a Demo Application to Market Trends

How Does the People-Counting Demo Work?

ST and Schneider Electric collaborated on a recent people-counting application that took advantage of a DQNN. The system ran inference on an STM32H7, processing thermal sensor images to determine whether people crossed an imaginary line and in which direction, and therefore whether they were entering or leaving. The choice of components is remarkable because it kept the bill of materials relatively low. Instead of moving to a more expensive processor, Schneider used a deeply quantized neural network to significantly reduce memory and CPU usage, shrinking the application’s footprint and opening the door to a more cost-effective solution. Both companies showcased the demo at the tinyML conference in March 2022.
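The line-crossing decision described above can be sketched as a simple post-processing step. The Python below is a hypothetical illustration, not the ST and Schneider Electric implementation: it assumes the network (or a tracker after it) already yields one (x, y) position per frame for each person, in sensor-pixel coordinates, and it uses the sign of a 2D cross product to tell which side of the virtual line a position falls on. Which direction counts as “entering” is an arbitrary convention here.

```python
def side(p, a, b):
    """Which side of the directed line a->b the point p lies on:
    +1 left, -1 right, 0 exactly on the line (sign of the 2D cross product)."""
    cross = (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])
    return (cross > 0) - (cross < 0)


def count_crossings(track, a, b):
    """Count (entering, leaving) events along one person's track of positions.
    Convention (arbitrary): right -> left of a->b is 'entering', left -> right is 'leaving'."""
    entering = leaving = 0
    prev = 0
    for p in track:
        s = side(p, a, b)
        if s == 0:
            continue  # on the line: wait until the person is clearly on one side
        if prev != 0 and s != prev:
            if s > 0:
                entering += 1
            else:
                leaving += 1
        prev = s
    return entering, leaving


# Hypothetical virtual horizontal line at y = 4, drawn from (0, 4) to (8, 4).
LINE_A, LINE_B = (0, 4), (8, 4)
walk_in = [(3, 1), (3, 3), (3, 4), (3, 5), (3, 7)]
walk_out = list(reversed(walk_in))
hesitate = [(2, 2), (2, 6), (2, 2)]  # crosses, then turns back

print(count_crossings(walk_in, LINE_A, LINE_B))
print(count_crossings(walk_out, LINE_A, LINE_B))
print(count_crossings(hesitate, LINE_A, LINE_B))
```

Ignoring positions that land exactly on the line avoids double-counting a person who pauses on it; a person who crosses and turns back is counted once in each direction, so the net occupancy stays correct.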

How to Overcome the Hype of Machine Learning at the Edge?

ST was the first MCU manufacturer to provide a solution like STM32Cube.AI, and our tool’s performance continues to rank highly in MLCommons benchmarks. As this latest journey from an academic paper to a software release shows, the reason behind our performance is that we prioritize meaningful research that impacts real-world applications. It’s about making AI practical and accessible instead of a buzzword. Market analysts from Gartner anticipate that companies working on embedded AI will soon be going through a “trough of disillusionment.” Today’s announcement and the demo application with Schneider show that, by being first and driven by research, ST has overcome this slope by staying at the center of practical applications and thoughtful optimizations.

For more information, visit blog.st.com/stm32cubeai-v72