How Qualcomm AI Research is optimizing hardware-specific compilers and chip design with AI

Combinatorial problems are all around us. When we are faced with many choices and we need to find an optimal solution, it is often the case that we are dealing with a combinatorial problem. Some examples of this: airlines need to plan the optimal routes in their networks and companies need to manage their supply chain to properly serve their customers at an optimized cost. Finally, players of the game Go need to strategically plan their moves to cover more territory on the board. The number of potential solutions in Go is remarkable: ~10170.

Combinatorial optimization problems are all around us.

In computer science, there is a popular combinatorial problem that fascinates students and professors alike: the Traveling Salesman Problem. Given a set of N cities with travel distance between each pair, find the shortest path that visits each city exactly once. The full enumeration quickly becomes infeasible as N grows. Using a brute force search method, if a computer can check a solution in a microsecond, then it would take two microseconds to solve three cities, 3.6 seconds for 11 cities, and 3857 years for 20 cities. Thankfully, we have more scalable methods than brute force available: heuristic methods and exact solver methods. Even so, neither of these “traditional” methods fully scales to large problems. They also do not incorporate knowledge from previous problem solving. That is where artificial intelligence (AI) can help.

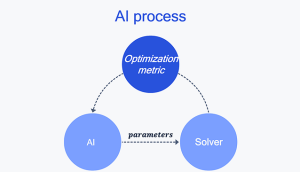

Develop an AI algorithm that can learn correlation between design parameters and optimization metrics from a limited set of problem instances.

AI leverages learned problem structure, scales to larger instances, offers a general framework, and provides speed/cost/resource benefits. Below, I will focus on two important use cases for Qualcomm: chip design and hardware-specific compilers.

Combinatorial optimization with AI for chip design

Optimizing chip design is important because it needs to account for all business metrics, whether in production cost reduction, power-performance optimization, design efficiency, or capital expenditure. The future of chip design faces a challenge as the advantages of Moore’s Law are slowing down. Design is a long and iterative process. We see that iterative optimization takes several days or weeks to meet Power/Performance/Area (PPA) requirements, and tools do not always converge without manual intervention. The challenges in chip placement are complex:

- Placing standard cells and macros (memories) optimally

- Satisfying the design constraints

- Evaluating PPA efficiently

- It is a huge combinatorial search problem

It turns out that AI can help with macro placement. That is why we have channelled our research efforts toward it.

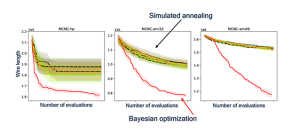

If the macro placement were on one line it would constitute a simpler permutation problem, but the actual problem is two-dimensional. Specifying a macro placement requires choosing two permutations, and so pairs of permutations are the search space. For example, there are (50!)2 possible placements of 50 macros, and we need an efficient search of this large macro placement space. Bayesian optimization proves to be a good method to achieve this. The goal of Bayesian optimization is to find the minimum of a function that is expensive to determine. In the case of chip design, Bayesian optimization learns a surrogate function of the PPA quality metric, which maps each macro placement to an estimate of this metric and uses it to efficiently search over the giant macro placement space. As a result, our model outperforms the standard solver. Overall, data-driven AI optimization can guide algorithmic optimization efficiently toward a solution.

Bayesian optimization can converge faster.

Combinatorial optimization with AI for hardware-specific compilers

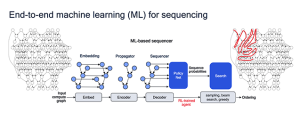

The AI compiler converts an input neural net into an efficient program that runs on target hardware, while optimizing for latency, performance, and power. A compiler consists of tiling, placement, sequencing, and scheduling steps of a computation graph. While important decisions are made in each of these steps, in this work we focus on the node sequencing problem of a graph, which determines the complete order of node processing.

Why is finding an optimal sequence challenging? First, the runtime on the target device is expensive to evaluate — it can take minutes to set up the compiler with needed decisions and run one computation graph. Secondly, there is quite a large decision space, as computation graphs can have many thousands of nodes N, and there are N! possible sequences in such a graph. The goal of our research is to find the optimal node sequence that leads to the best on-target performance.

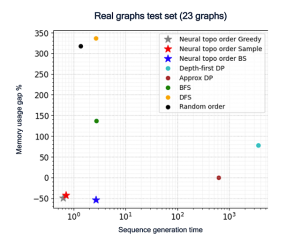

In our paper “Neural Topological Ordering for Computation Graphs” (NeurIPS 2022), we focus on sequencing computation graphs to minimize memory usage, which is also a good proxy for runtime latency. The input is a computation graph for a neural net (with up to tens of thousands of nodes). Our objective is to find a sequence of nodes that minimizes peak memory usage. The application is reducing the inference time of AI models on chips by minimizing access to external memory. It turns out that this can be achieved through end-to-end machine learning for sequencing with reinforcement learning used to train a Graph Neural Network encoder-decoder architecture.

This policy will then be able to generate good sequences for new unseen input graphs.

We used a combination of real and synthetic graphs for training, thus mitigating the data scarcity challenge. We also released the algorithm for creating the synthetic graphs in the same paper. The Neural Topological Order model performs better and is much faster than classical methods, as you can see below. The results are achieved on a real graph test set.

Our model generalizes well and beats baselines comprehensively.

I am excited by the state-of-the-art results Qualcomm AI Research has achieved in combinatorial optimization for chip design and AI compilers. Since combinatorial problems are all around us and even slightly more optimal solutions provide huge benefits, we look forward to scaling combinatorial optimization with AI even further to other technology areas and across industries.