A common misconception is that test data is purely pass/fail, but this could not be further from the truth. Even though traditional, fixed-functionality instruments send only the results back to the host PC of a test system, they hide a lot of signal processing under their plastic shells. The processor inside your instrument determines the speed of your measurement. This is especially true for signal-processing-intensive measurements in applications such as RF, sound and vibration, and waveform-based oscilloscope measurements.

For example, even the fastest FFT-based spectrum analyzers on the market spend at most 20 percent of their measurement time actually acquiring the signal; the remaining 80 percent or more is spent processing the signal for the given algorithm. For an instrument released five years earlier, the breakdown is even worse: signal processing can consume up to 95 percent of the measurement time. Because investing in a completely new portfolio of instrumentation each year is not an option, using antiquated test equipment to test modern, complex devices is a reality. Most test departments end up with a large gap between the processing power of their systems and their true processing needs.

Modular test systems feature three main, separate parts: the controller, the chassis, and the instrumentation. The controller works like an industrial PC and contains the CPU of the system. The main benefit of this approach is the ability to replace the CPU with the latest processing technology and keep the remaining components (chassis/backplane and instrumentation) as is in the test system. For most use cases, keeping the instrumentation and upgrading the processing extends the life of a modular test system well beyond that of a traditional instrument.

Both modular and traditional instruments rely on the same advancements in processor technology to increase test speed, but modular systems are much more agile and economical to upgrade than traditional instruments.
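To make the effect of a controller upgrade concrete, the sketch below applies an Amdahl-style estimate to the acquisition and processing shares quoted above. It assumes that acquisition time is unchanged by a CPU upgrade and that processing time scales inversely with processor performance; the function name and the 2x upgrade factor are illustrative assumptions, not figures from this article.

```python
def measurement_speedup(processing_fraction: float, cpu_speedup: float) -> float:
    """Estimate overall measurement speedup when only processing gets faster.

    Assumption: acquisition time stays fixed, and processing time shrinks
    by exactly `cpu_speedup` (an idealized, Amdahl-style model).
    """
    if not 0.0 <= processing_fraction <= 1.0:
        raise ValueError("processing_fraction must be between 0 and 1")
    if cpu_speedup <= 0:
        raise ValueError("cpu_speedup must be positive")
    acquisition_fraction = 1.0 - processing_fraction
    return 1.0 / (acquisition_fraction + processing_fraction / cpu_speedup)


if __name__ == "__main__":
    # Processing shares taken from the text: 80 percent and 95 percent.
    for share in (0.80, 0.95):
        gain = measurement_speedup(share, cpu_speedup=2.0)
        print(f"{share:.0%} processing: {gain:.2f}x faster")
    # Prints:
    # 80% processing: 1.67x faster
    # 95% processing: 1.90x faster
```

Under these assumptions, the larger the processing share, the closer the overall gain tracks the processor upgrade itself, which is why older, processing-bound instruments stand to benefit the most.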

Changes in the Processor Market

In 2005, Intel released the first multicore processor, the Intel® Pentium® D processor, to the mainstream market. Accustomed to harnessing the power of ever-increasing processor speed, software developers were forced to consider new parallel programming techniques to continue to reap the benefits of Moore’s law. As spelled out by Geoffrey Moore in his book, Crossing the Chasm, technology adoption follows a bell curve with respect to time and features five progressive participant states: innovators, early adopters, early majority, late majority, and laggards. Certain industries, such as gaming and video rendering, were quick to adopt parallel programming techniques, while other industries have been slower to embrace them.

Unfortunately, automated test engineers fall into the late majority category when it comes to adopting parallel programming techniques. This could be attributed to a multitude of reasons, but perhaps the biggest is that they had no incentive to re-architect their software for multicore processors. Until now, most automated test engineers have used technology such as Intel Turbo Boost to increase the speed of a single core on a quad-core processor and reduce the test times of sequential software architectures, but this technology is plateauing. Many factors, such as heat dissipation, are preventing processor speed from increasing at its previous rate. To keep power use down while boosting performance, Intel and other processor manufacturers are turning to many-core technology, as seen in the Intel® Xeon® processor that features eight logical cores. The result is a processor with a clock speed similar to that of its predecessor but with more computational cores that can crunch data.

Applications Poised for Many-Core Processors

Certain test application areas are prime candidates for leveraging the power of many-core processor technologies. In The McClean Report 2015, IC Insights researchers examine many aspects of the semiconductor market, including the economics of the business. They state, “For some complex chips, test costs can be as high as half the total cost…longer test times are driving up test costs.” They go on to note, “Parallel testing has been and continues to be a big cost-reduction driver…”

Just as research has shown that humans face inefficiencies when multitasking in day-to-day work, systems experience overhead when performing tests in parallel. In semiconductor test, test managers use the parallel test efficiency (PTE) of a test system to measure this overhead. If the test software is architected properly, increasing the available processing cores in a test system should improve the PTE for a given test routine. Though test managers must consider many factors such as floor space, optimal parts-per-hour throughput, and capital expense when investing in automated test systems, an upgrade to the parallel processing power of the system typically has a positive impact on the business by improving the PTE.

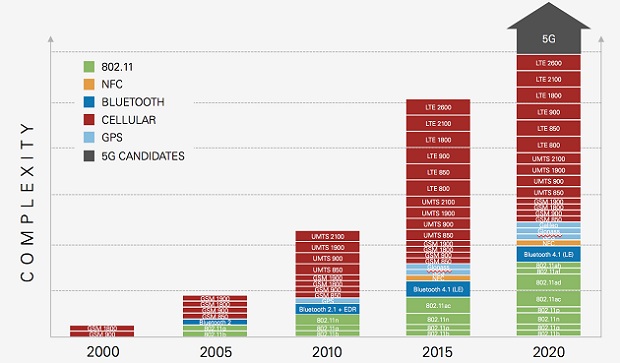
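The article does not spell out how PTE is calculated. The sketch below uses a definition that is common in semiconductor test, which compares the time to test N sites in parallel against the time to test a single site; treat the formula, the function name, and the example timings as assumptions for illustration rather than values from this article.

```python
def parallel_test_efficiency(t_single: float, t_parallel: float, sites: int) -> float:
    """Return PTE as a fraction (1.0 means no parallel overhead).

    Assumed definition:
        PTE = 1 - (t_parallel - t_single) / ((sites - 1) * t_single)

    t_single   : time to test one device on its own
    t_parallel : time to test `sites` devices at once
    """
    if sites < 2:
        raise ValueError("PTE is only defined for two or more sites")
    if t_single <= 0:
        raise ValueError("t_single must be positive")
    overhead = (t_parallel - t_single) / ((sites - 1) * t_single)
    return 1.0 - overhead


if __name__ == "__main__":
    # Hypothetical timings: 10 s for one site, 13 s for four sites in parallel.
    pte = parallel_test_efficiency(t_single=10.0, t_parallel=13.0, sites=4)
    print(f"PTE: {pte:.0%}")  # prints "PTE: 90%"
```

With this definition, a system that tests four devices in the time it takes to test one scores 100 percent, and a system that is effectively serial (four times the single-site time) scores 0 percent.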

But the semiconductor industry is not alone in adopting parallel test. You would be hard-pressed to find a wireless test system that tests fewer than four devices at a time. Many-core processors are also primed to impact any industry that must test the wireless connectivity or cellular communication protocols of a device. It is no secret that 5G is currently being researched and prototyped, and this technology will far exceed the bandwidth capabilities of current RF instrumentation. In addition to processing all of the data sent back from the high-bandwidth signal analyzer for a single protocol, wireless test systems must test multiple protocols in parallel. For example, a smartphone manufacturer needs to test not only 5G when it is released in the near future, but also most of the previous cellular technologies as well as Bluetooth, 802.11, and any other connectivity variants. And, generally speaking, each new communication protocol requires test algorithms that are more processor-intensive than those of its predecessor. Just as many-core processors help increase the PTE of a semiconductor test system, increasing the ratio of processing cores to protocols and devices under test will help reduce test time further.
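As a rough illustration of spreading per-device, per-protocol processing across cores, the sketch below fans a small job matrix out to a process pool using only the Python standard library. The device labels, the protocol-to-tone mapping, and the naive DFT that stands in for a real measurement algorithm are all hypothetical; this is not the API of any instrument or vendor software.

```python
import cmath
import math
import os
from concurrent.futures import ProcessPoolExecutor
from itertools import product

# Hypothetical labels, used only for illustration.
DEVICES = ["DUT0", "DUT1", "DUT2", "DUT3"]
# Each protocol maps to the tone bin of a synthetic capture (assumed values).
PROTOCOLS = {"cellular": 12, "bluetooth": 20, "802.11": 33}
N_SAMPLES = 256


def analyze(job):
    """Stand-in for a CPU-bound measurement: find the strongest DFT bin."""
    device, protocol = job
    tone_bin = PROTOCOLS[protocol]
    samples = [math.sin(2 * math.pi * tone_bin * n / N_SAMPLES)
               for n in range(N_SAMPLES)]
    # Naive DFT over the first half of the spectrum; a real system would
    # use an optimized FFT library instead.
    magnitudes = [
        abs(sum(s * cmath.exp(-2j * math.pi * k * n / N_SAMPLES)
                for n, s in enumerate(samples)))
        for k in range(N_SAMPLES // 2)
    ]
    peak_bin = max(range(len(magnitudes)), key=magnitudes.__getitem__)
    return device, protocol, peak_bin


def run_parallel(jobs, workers=None):
    """Process every (device, protocol) job on a pool of worker processes."""
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(analyze, jobs))


if __name__ == "__main__":
    jobs = list(product(DEVICES, PROTOCOLS))  # 4 devices x 3 protocols
    for device, protocol, peak_bin in run_parallel(jobs, workers=os.cpu_count()):
        print(device, protocol, "peak bin", peak_bin)
```

Processes are used rather than threads because the stand-in analysis is pure Python and CPU-bound. With twelve independent jobs, adding cores up to the job count lets more of them run at the same time, which is the ratio of cores to protocols and devices that the paragraph above describes.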

Application Software

Software has evolved from a minor component to a leading role in test and measurement. With the aforementioned change in the CPU market, test engineers now face the even more daunting challenge of implementing parallel test software architectures. When using a general-purpose programming language such as C or C++, properly implementing parallel test techniques often requires man-months of development. When time to market is a priority, test managers and engineers must emphasize developer productivity. Adopting application-specific test code development software (for example, NI LabVIEW) and test management software (for example, NI TestStand) moves the administrative work of parallel processing and thread swapping from test departments to the software R&D staff of the commercial software company. Application-specific software enables test engineers to focus on the code that is critical to testing their devices.

Preparing for the Future

Complexity has risen at an extraordinary pace over the past decade, and it shows no signs of slowing down. The next generation of cellular communication is slated to arrive in 2020. Gartner estimates that every household in the world will have more than 500 connected devices by 2022, and sensors will most likely be smaller than the diameter of a human hair. Growing consumer expectations for high-quality product user experiences require manufacturers to rigorously design and test their products to be competitive. Test engineers will be called on to test these products and ensure that they function safely and reliably. To achieve this economically, they will need to adopt a modular, software-centric approach that emphasizes parallel testing. Using many-core processing technology is no longer an option; it is now a requirement to stay economically viable. The only remaining question is how test departments will change their software approaches to take advantage of the additional processing cores.

Author Information

Adam Foster

Senior Product Marketing Manager

Automated Test

National Instruments

Shahram Mehraban

Director

Industrial and Energy Solutions

Intel