Advancing from natural to artificial vision

The eye is an evolutionary masterpiece of nature. From the light-sensitive retina to the information-carrying optic nerve to the analyzing brain, natural vision is a highly complex form of data processing that relies on low-power neural networks. By intelligently abstracting what they see, humans and animals can judge within a fraction of a second how relevant the light captured by their eyes is to their lives. This masterpiece of natural intelligence took millions of years to evolve. Developers of artificial intelligence (AI) systems need to achieve this feat faster.

Developers of AI-accelerated applications are therefore turning to compact, preconfigured embedded vision kits that combine proven AI hardware and software in an energy-efficient way. Interest is currently particularly high in dedicated edge computing solutions, because the edge is the critical point at which AI-accelerated systems must make informed, real-time decisions based on image information. The detour via cloud-based analysis takes longer and depends on continuous network availability. At the edge, by contrast, the system is always at the scene of the action, which makes it possible to autonomously acquire and evaluate visual image data in fractions of a second.

NPU – the heart of embedded vision systems

A neural processing unit (NPU) is indispensable for providing the computing performance required for deep learning and machine learning at the edge. NPUs excel at analyzing images and patterns, making them the central computational unit of AI-accelerated embedded vision systems. Because this dedicated hardware executes neural network operations far more efficiently than general-purpose processor cores, NPUs consume just a few watts even for compute-intensive tasks. Closely related neuromorphic processors, inspired by the architecture of the brain’s neural network, go a step further: they are event-driven and draw power only when there is something to process. Even at such low power, NPUs can achieve several tera operations per second (TOPS), which meets the edge computing requirements of embedded systems development.
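To put a TOPS rating into perspective, one can estimate an upper bound on how many inferences per second an NPU could run. The sketch below is a back-of-the-envelope calculation, not a benchmark. The 2.3 TOPS figure is the one quoted for the i.MX 8M Plus in this article; the operation count per inference (0.6 billion, on the order of a small mobile image classification network) and the 30% sustained utilization are hypothetical assumptions. Vendors usually count one multiply-accumulate as two operations, and real throughput depends heavily on the model and the software stack.

```python
"""Back-of-the-envelope NPU throughput estimate (illustrative only)."""


def max_inferences_per_second(npu_tops: float, gops_per_inference: float,
                              utilization: float = 1.0) -> float:
    """Upper bound on inferences per second for a given peak rating.

    npu_tops           -- peak rating in tera operations per second (1 TOPS = 1e12 ops/s)
    gops_per_inference -- operations one forward pass needs, in billions (assumed)
    utilization        -- fraction of the peak rating actually sustained (assumed)
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    sustained_ops_per_s = npu_tops * 1e12 * utilization
    ops_per_inference = gops_per_inference * 1e9
    return sustained_ops_per_s / ops_per_inference


if __name__ == "__main__":
    # 2.3 TOPS as quoted for the i.MX 8M Plus NPU; model size and utilization are hypothetical.
    ips = max_inferences_per_second(npu_tops=2.3, gops_per_inference=0.6, utilization=0.3)
    print(f"~{ips:.0f} inferences/s, ~{1000 / ips:.2f} ms per inference")
    # Headroom relative to a 30 fps camera stream.
    print(f"headroom at 30 fps: ~{ips / 30:.0f}x")
```

Such an estimate only bounds what is possible; once hardware is available, measured throughput for the actual model should replace it.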

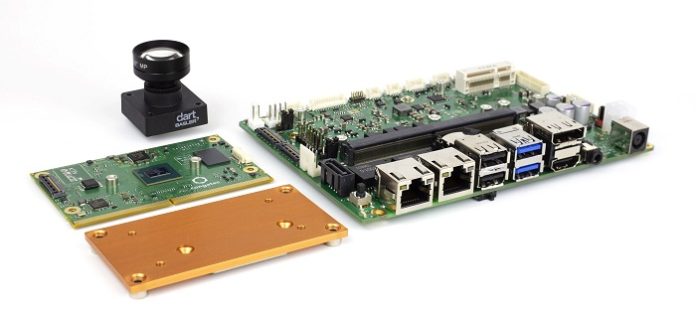

Customized starter set for the edge

Semiconductor manufacturer NXP has equipped its i.MX 8M Plus processor with such an NPU to make it fit for machine learning. The NPU delivers up to 2.3 TOPS and is complemented by four Arm Cortex-A53 application cores and an Arm Cortex-M7 microcontroller core. In addition, the i.MX 8M Plus has an image signal processor (ISP) for parallel real-time processing of high-resolution images and video. Because the ISP pre-processes images while they are being acquired, the NPU receives cleaner input data and can deliver more accurate results in the subsequent analysis. This is valuable not only for high-performance industrial image processing but wherever image processing algorithms can produce better visual results.

To speed up the development of designs based on the NXP processor, congatec has designed and launched a starter set with which developers can quickly and securely bring AI vision to their edge applications. At the heart of the embedded vision kit is a credit-card-sized SMARC 2.1 Computer-on-Module (COM) with an i.MX 8M Plus processor. The module can drive up to three independent displays, features hardware-accelerated video decoding and encoding, and provides up to 128 GB of eMMC for data storage. Thanks to H.265 video compression, high-resolution camera streams pre-selected from the two integrated MIPI-CSI interfaces can be sent directly to a central control station. With its multiple peripheral interfaces, the SMARC module gives access to an extensive ecosystem of AI-accelerated embedded systems and, depending on the configuration, is suitable for industrial use in a temperature range of -40 to +85°C. It also draws just 2 to 6 watts in operation and can be passively cooled.
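Why video compression matters becomes clear from the raw data rate of an uncompressed stream. A minimal sketch, assuming a hypothetical 1920 x 1080 camera at 30 frames per second in 8-bit YUV 4:2:0 format (12 bits per pixel on average) and a hypothetical 8 Mbit/s target bitrate for the compressed H.265 stream; neither value is a specification of the kit.

```python
"""Raw vs. compressed bandwidth for camera streams (illustrative figures only)."""


def raw_bitrate_mbps(width: int, height: int, fps: float, bits_per_pixel: float) -> float:
    """Uncompressed video data rate in megabits per second (1 Mbit = 1e6 bits)."""
    return width * height * bits_per_pixel * fps / 1e6


def required_compression_ratio(raw_mbps: float, target_mbps: float) -> float:
    """Compression ratio needed to fit the raw stream into the target bitrate."""
    if target_mbps <= 0:
        raise ValueError("target bitrate must be positive")
    return raw_mbps / target_mbps


if __name__ == "__main__":
    # Hypothetical stream: 1080p30, YUV 4:2:0 with 8-bit samples -> 12 bits per pixel.
    per_camera = raw_bitrate_mbps(1920, 1080, 30, 12)
    both_cameras = 2 * per_camera  # two MIPI-CSI camera inputs, as in the article
    print(f"raw per camera: {per_camera:.1f} Mbit/s, both: {both_cameras:.1f} Mbit/s")
    # Hypothetical 8 Mbit/s target per compressed H.265 stream.
    print(f"compression ratio needed: ~{required_compression_ratio(per_camera, 8):.0f}:1")
```

Under these assumptions a single uncompressed stream needs roughly 750 Mbit/s, which is why hardware encoding is what makes forwarding camera streams to a control station practical.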

Edge solutions must be robust and reliable

Robust and reliable hardware is an absolute must for visual edge computing systems because, unlike in cloud computing, the data cannot be processed in a protected, air-conditioned environment. Whether deployed outdoors or in the field, traveling on board a vehicle, or sitting on the manufacturing floor, visual edge computing systems must be resilient. Demand for AI vision comes from applications such as ripe fruit detection in agriculture, automated product inspection in manufacturing, access control in building automation, or product recognition in retail shopping carts at the point of sale. One clear advantage of edge-based real-time analysis over human inspection is that it works 24 hours a day, seven days a week. The advantages are particularly significant for industrial operations in inhospitable environments. Examples include the monitoring of wind turbines and safety-related video surveillance of production processes. According to a McKinsey study, AI systems can also increase plant utilization and productivity by up to 20% through predictive maintenance, and visual quality monitoring with automatic defect detection can even yield productivity increases of up to 50%. Finally, given the high safety requirements of autonomous driving, edge-based AI solutions are essential to ensure the reliable and safe transport of goods and people.

Leveraging eIQ to customize vision

Given the variety of possible embedded vision applications, a starter set must support custom development. For this purpose, NXP offers the eIQ Machine Learning software development platform, where eIQ stands for edge intelligence. It gives developers of AI-accelerated systems a platform that combines libraries and development tools adapted to NXP microprocessors and microcontrollers. This includes inference engines, the runtime software that applies a trained model to new input data to produce results such as classifications or detections. eIQ supports inference engines and libraries such as Arm NN and the open-source TensorFlow Lite.
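Inference engines such as TensorFlow Lite commonly run 8-bit quantized models on NPUs of this kind. In TensorFlow Lite's quantization scheme, a real value x and its 8-bit integer q are related by x = (q - zero_point) * scale. The plain-Python sketch below shows that mapping for input data. The scale and zero-point values are hypothetical; in practice they would be read from the model, for example from the `quantization` entry returned by the TensorFlow Lite interpreter's `get_input_details()`.

```python
"""8-bit affine quantization in the style of TensorFlow Lite (illustrative sketch)."""
from typing import List, Sequence


def quantize(values: Sequence[float], scale: float, zero_point: int,
             qmin: int = -128, qmax: int = 127) -> List[int]:
    """Map real values to int8: q = round(x / scale) + zero_point, clamped to [qmin, qmax]."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(qvalues: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate real values: x = (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in qvalues]


if __name__ == "__main__":
    # Hypothetical input tensor parameters: pixels normalized to [0, 1].
    # In a real model these come from the interpreter's input details.
    scale, zero_point = 1 / 255, -128
    pixels = [p / 255 for p in (0, 128, 255)]
    q = quantize(pixels, scale, zero_point)
    print(q)  # [-128, 0, 127]
    print(dequantize(q, scale, zero_point))
```

Clamping to the int8 range keeps out-of-range inputs from wrapping around; the price of quantization is a rounding error of at most half a scale step per value.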

The AI-accelerated eye comes from Basler

While the SMARC COM in the starter set is the artificial brain behind the AI eye, a postcard-sized 3.5-inch carrier board serves as the optic nerve. As the central interface for data communication, it uses MIPI CSI-2 to connect the “brain” with the “eye”, in this case a Basler dart BCON MIPI camera. No additional converter modules are required, and everything an AI-accelerated image processing system needs fits on a minimal footprint. Barely larger than a matchbox and fitted with a lens with a 4 mm focal length, the Basler dart camera can be integrated even in tight spaces. Its low power consumption and minimal heat generation make it particularly well suited for use at the edge. On the software side, Basler’s pylon camera software suite provides a uniform SDK that also works with interfaces other than MIPI CSI-2 and can control industrial cameras with USB 3 or GigE interfaces. Thanks to the integrated Basler dart camera and software package, the starter set for i.MX 8M Plus processors gives developers easy access to core AI-accelerated machine vision features such as triggering, ultra-fast single-frame image delivery, fine-grained camera configuration options, and custom inference algorithms based on the Arm NN and TensorFlow Lite ecosystems. This gives developers the tools to leverage the full performance of the NPU.

Designed for human-machine interaction

The comprehensive and preconfigured AI vision starter set not only saves time when developing embedded vision systems; it also brings the certainty of relying on established technologies and proven standards. It is the combination of the Arm Cortex-A cores and the NPU in the NXP processor that enables edge devices to make intelligent decisions on the spot, learning from visual information and drawing appropriate conclusions with little or no human intervention. The scope of applications for the NPU-based embedded vision starter set goes far beyond recognizing people or objects. For example, hand gesture and emotion recognition, combined with natural language processing, make the starter set ideal for interactive communication between humans and machines. Ultra-short response times and precise localization help to optimize robotic product assembly or warehouse logistics in industrial manufacturing. Thanks to high safety standards, the starter set is also suitable for applications in sensitive areas such as customer service or healthcare. Finally, the list includes applications in research and science, where the AI-accelerated eye can, for instance, explore the depths of the oceans, support research in the perpetual ice of Antarctica, or observe and analyze in real time what is happening on the Moon, Mars and other celestial bodies during space missions. In space, sending image data back to Earth for analysis would take far too long to react in real time.
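The last point is simple arithmetic: even at the speed of light, a signal needs time to cover interplanetary distances. The sketch below computes the minimum one-way and round-trip signal delay, ignoring processing, queuing and the limited bandwidth of deep-space links; the distances are approximate values chosen for illustration.

```python
"""Minimum signal propagation delay for remote image analysis (illustrative)."""

SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, km/s


def one_way_delay_s(distance_km: float) -> float:
    """Minimum time for a signal to cover distance_km at the speed of light."""
    return distance_km / SPEED_OF_LIGHT_KM_S


if __name__ == "__main__":
    # Approximate distances from Earth in km.
    distances = {
        "Moon (average)": 384_400,
        "Mars (closest approach)": 54.6e6,
        "Mars (farthest)": 401e6,
    }
    for body, km in distances.items():
        t = one_way_delay_s(km)
        # A round trip (send image, receive command) takes at least twice as long.
        print(f"{body}: one way {t:.1f} s ({t / 60:.1f} min), round trip >= {2 * t:.1f} s")
```

With a round trip of about six minutes even at Mars' closest approach, only on-board analysis can react in real time.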

Contributing Author: