Courtesy: Samsung

1. Introduction

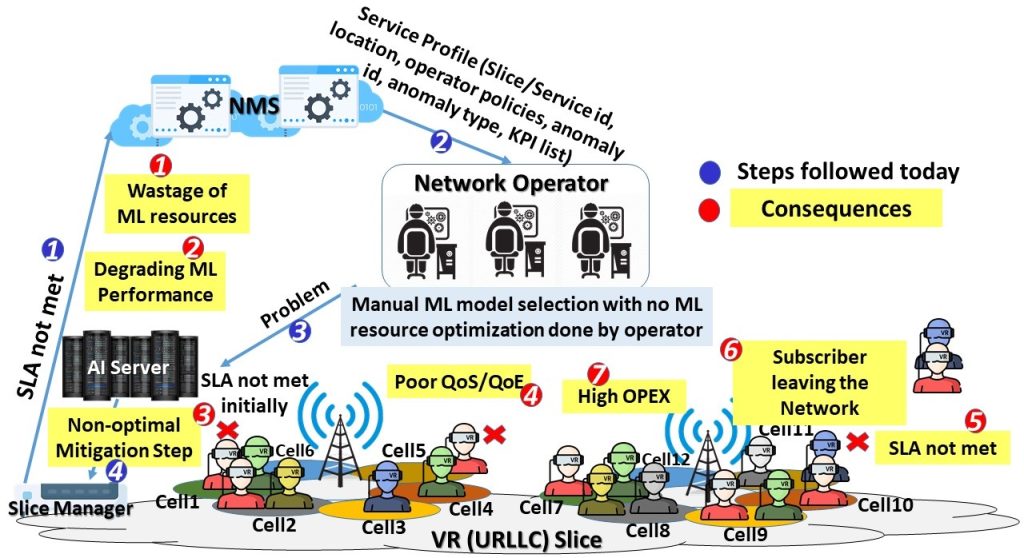

Diverse services and devices generate heterogeneous traffic patterns, so selecting appropriate ML models and allocating ML resources according to the network’s current ML resource usage is crucial. Managing these choices manually is difficult, because the operator must weigh ML resource availability, specific service requirements, the problem type of the ML/AI application, ML periodicity (data collection interval and prediction frequency), acceptable error thresholds, and desired model accuracy. Manual provisioning can result in inadequate model selection and suboptimal allocation of ML resources, which leads to ineffective problem mitigation, degraded network QoE/QoS, increased OPEX for network operators, or subscriber loss. Hence, there is a need to automate ML model selection, the choice of related parameters (periodicity, errors, accuracies), and ML resource provisioning. The problem statement is depicted in Figure 1.

In 5G networks, where a large number of services and cells generate vast amounts of data, manual management of ML services can lead to suboptimal or inappropriate selection of ML packages. Such choices use ML resources inefficiently and degrade the performance of ML training and prediction. The resulting mitigation solutions may in turn degrade QoS/QoE and violate service level agreements (SLAs), which can cause subscriber churn as users abandon the network due to poor service quality, and can also increase the operator’s OPEX.

2. Our proposition:

To tackle the challenges mentioned above, we introduce AutoMLPoweredNetworks: an automated solution for Machine Learning (ML) service provisioning in next-generation networks.

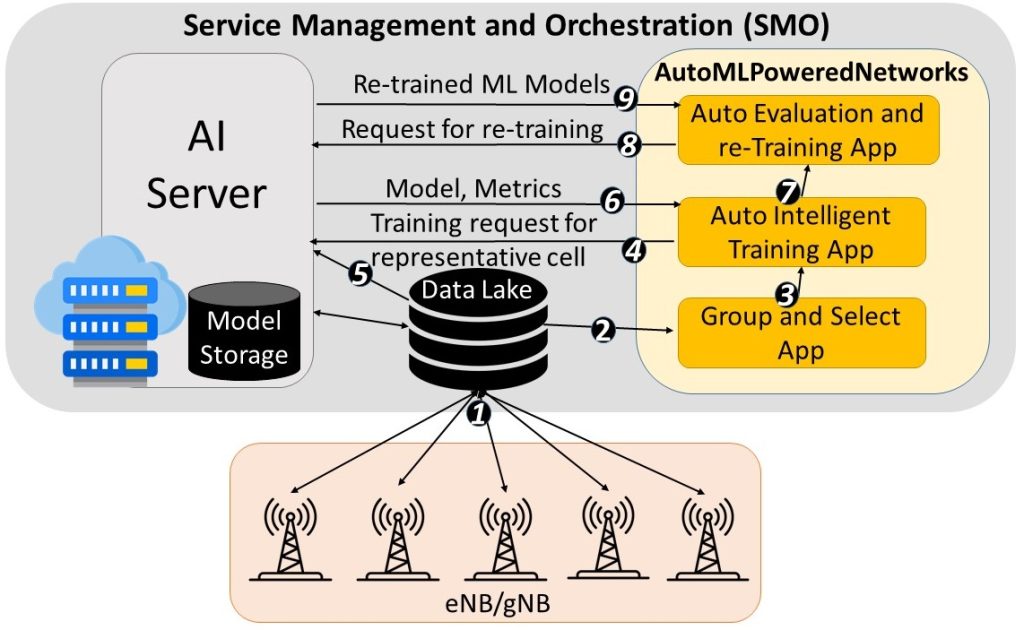

- Whenever an anomaly is detected in the network, the SMO collects data from the eNB/gNB so that the anomaly can be rectified with the help of the AI server. The network KPIs are extracted periodically from the eNB/gNB and stored in the data lake.

- The AutoMLPoweredNetworks framework optimizes the AI/ML jobs before any AI/ML is applied to the data and a solution is requested from the AI server. The network KPIs extracted for the different cells are fetched by the group-and-select application. This application runs the similarity metric selection algorithms on the data and automatically identifies the best available one. The algorithms considered are DTW, Pcor, Kcor and Scor. Using the selected similarity metric, the application groups cells that exhibit the same characteristics. For each group, the representative cell is selected either randomly or by max order selection, where the max order cell is the one with the maximum number of neighboring cells.

- The output (group ids, respective representative cells, and the similarity algorithm used) will be shared with the auto-intelligent training app.

- The auto-intelligent training app sends the training and prediction requests for the representative cells to the AI server and indicates the best available ML model for each representative cell. It also sends training requests for the cells that do not belong to any group.

- The AI server fetches the relevant data from the data lake, trains the ML model for each representative cell, and stores it in the model storage.

- The models and the respective accuracies are sent back to the auto-intelligent training app.

- The auto-intelligent training app shares the fetched model of each representative cell with the auto evaluation and re-training app. The auto evaluation and re-training app re-uses the representative cell's ML model to make predictions for the remaining cells in the group (those other than the representative cell). It then evaluates the prediction accuracy of each cell on which the model has been re-used.

- If the evaluated accuracy of any cell in the group is below a set threshold, the app sends a re-training request to the AI server, which trains only certain layers of the model and uses fewer epochs.

- Re-trained models are returned to the auto evaluation and re-training app.
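The grouping and representative-selection steps above can be sketched as follows. This is a minimal illustration, not the framework's actual implementation: it assumes Pearson correlation as the similarity metric (the source lists Pcor among the candidates but does not describe how the best metric is chosen), a hypothetical threshold of 0.9, and a simple greedy grouping rule. The names `pearson`, `group_cells`, `pick_representative`, and the sample data are all made up for the example.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation of two equal-length KPI series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0

def group_cells(kpi, threshold=0.9):
    """Greedy grouping (an assumption): a cell joins the first group
    whose first member it correlates with at or above the threshold."""
    groups = []
    for cell in sorted(kpi):
        for group in groups:
            if pearson(kpi[group[0]], kpi[cell]) >= threshold:
                group.append(cell)
                break
        else:
            groups.append([cell])  # no similar group found: start a new one
    return groups

def pick_representative(group, neighbors):
    """Max order selection: the cell with the most neighboring cells.
    Ties go to the smallest cell id (an assumption)."""
    return max(sorted(group), key=lambda c: len(neighbors.get(c, ())))

# Hypothetical KPI series and neighbor lists for four cells
kpi = {
    "A": [1, 2, 3, 4, 5],
    "B": [2, 4, 6, 8, 10.5],
    "C": [5, 3, 4, 1, 2],
    "D": [9, 1, 8, 2, 7],
}
neighbors = {"A": ["B", "C"], "B": ["A", "C", "D"], "C": ["A"], "D": []}

for group in group_cells(kpi):
    print(group, "representative:", pick_representative(group, neighbors))
```

Cells that end up in a single-member group here correspond to the cells that "do not belong to any group" and are trained individually.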

The proposed AutoMLPoweredNetworks saves ML resources by re-using the trained model of each representative cell for the remaining cells in its group, without performing any training for them.
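To make the re-use decision concrete, the sketch below splits the members of one group into cells that keep the representative's model and cells that are sent for partial re-training. The function name, the accuracy values, and the threshold of 0.9 are hypothetical; the source only states that a cell is re-trained when its evaluated accuracy is below a set threshold.

```python
def split_by_accuracy(accuracies, representative, threshold):
    """Split group members into cells that keep the re-used model
    and cells whose accuracy is below the threshold."""
    reuse, retrain = [], []
    for cell in sorted(accuracies):
        if cell == representative:
            continue  # the representative was trained directly
        if accuracies[cell] >= threshold:
            reuse.append(cell)
        else:
            retrain.append(cell)
    return reuse, retrain

# Hypothetical accuracies from evaluating the representative's model on each cell
group_accuracy = {"cell-1": 0.97, "cell-2": 0.93, "cell-3": 0.71, "cell-4": 0.95}

reuse, retrain = split_by_accuracy(
    group_accuracy, representative="cell-1", threshold=0.9
)
print("re-use as-is:", reuse)
print("partial re-training:", retrain)
```

In this example only `cell-3` would trigger a re-training request; the other two non-representative cells need no training at all.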

3. Experimental Setup:

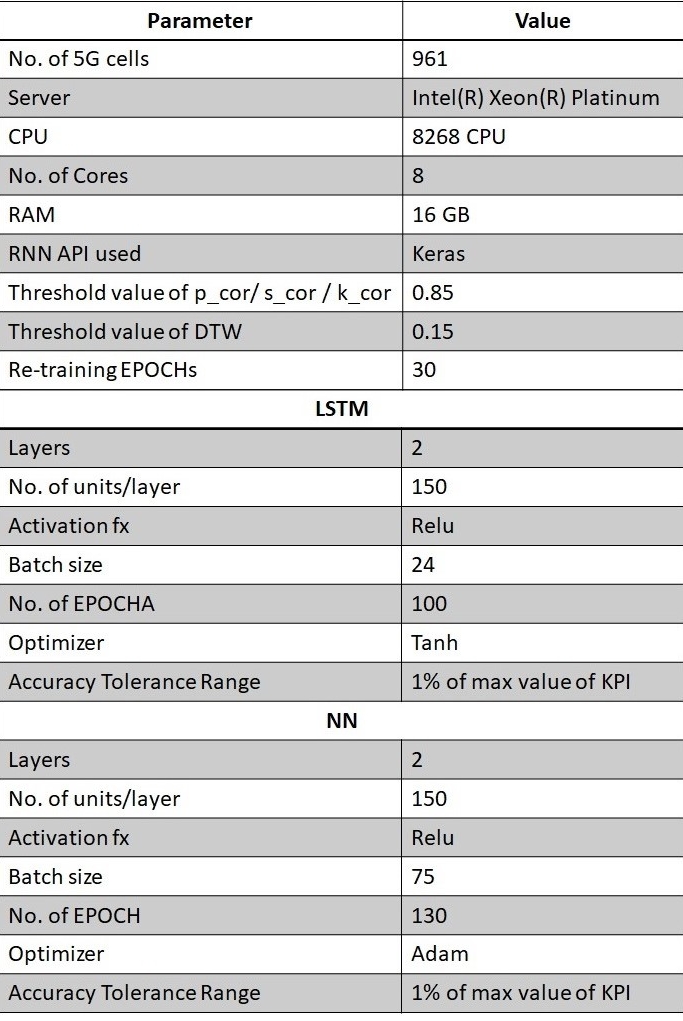

We conducted our experiments using 961 cells from a real-field 5G operator dataset, which included three network Key Performance Indicators (KPIs): Cell Data Rate, Resource Usage Percentage, and the number of connected UEs. The datasets consisted of one month’s worth of data collected at hourly intervals. We divided the datasets into an 80-20 split, with 80% for training and 20% for validation. For our experiments, we employed two deep learning models: an LSTM (Long Short-Term Memory) model and an NN (Neural Network) model. The experiments were performed on an Intel Xeon Platinum 8268 CPU (16 GB RAM and 8 cores). We used the Keras RNN API for the implementation. The experiment parameters are outlined in Table 1.
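As an illustration of the data preparation, the sketch below splits one cell's hourly series 80-20 and builds sliding windows of the kind typically fed to a recurrent model. Several details are assumptions not stated in the source: a 30-day month (720 hourly samples), a chronological rather than random split, the larger portion being the one used to fit the model, and a hypothetical look-back of 24 hours. The helper names are made up for the example.

```python
def chronological_split(series, first_percent=80):
    """Split a time series in order, without shuffling."""
    cut = len(series) * first_percent // 100
    return series[:cut], series[cut:]

def make_windows(series, lookback):
    """Pair each run of `lookback` samples with the sample that follows it."""
    inputs = [series[i:i + lookback] for i in range(len(series) - lookback)]
    targets = [series[i + lookback] for i in range(len(series) - lookback)]
    return inputs, targets

# Placeholder series: one value per hour for an assumed 30-day month
hourly_kpi = [float(h % 24) for h in range(30 * 24)]

first_part, second_part = chronological_split(hourly_kpi)
inputs, targets = make_windows(first_part, lookback=24)

print(len(first_part), len(second_part))  # 576 and 144 samples
print(len(inputs), len(inputs[0]))        # 552 windows of 24 samples each
```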

4. Experimental Results:

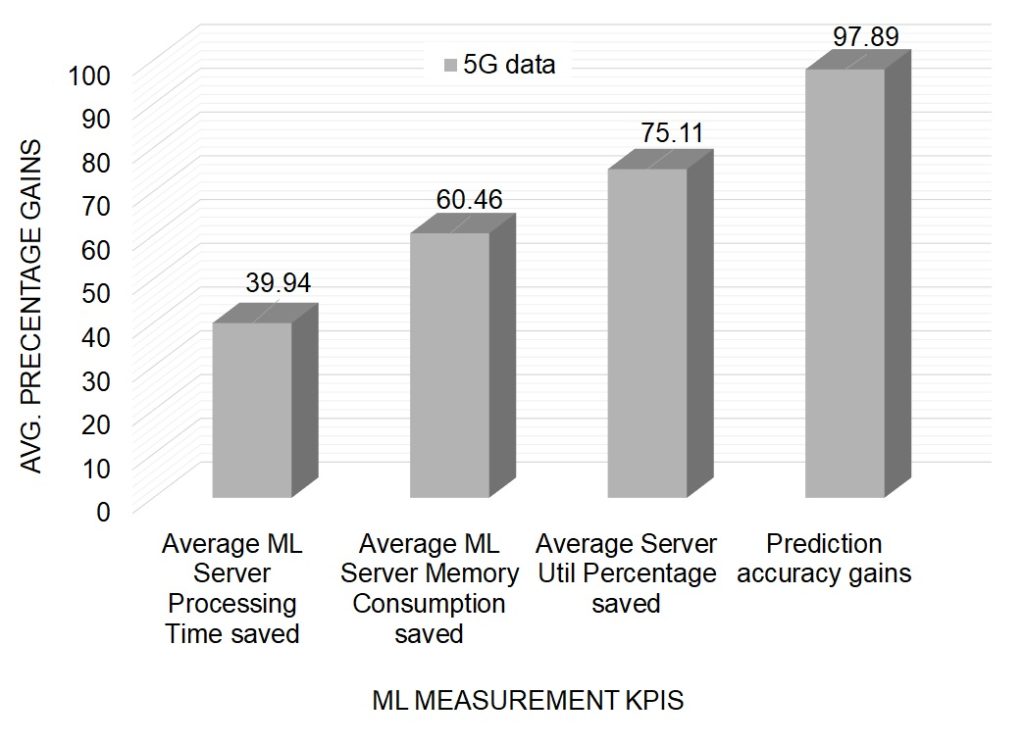

We examined the efficacy of three applications (for 5G data) across various proposed Key Performance Indicators related to Machine Learning.

Figure 3 shows the average gains across the different ML measurement KPIs for 5G network data. On average across the network KPIs, we saved 39.94% of server processing time, 60.46% of server memory consumption and 75.11% of server utilization. For the Resource Usage Percentage KPI, 97.89% of cells performed well in terms of training accuracy when the similar cells in a group used the model trained on that group's representative cell.

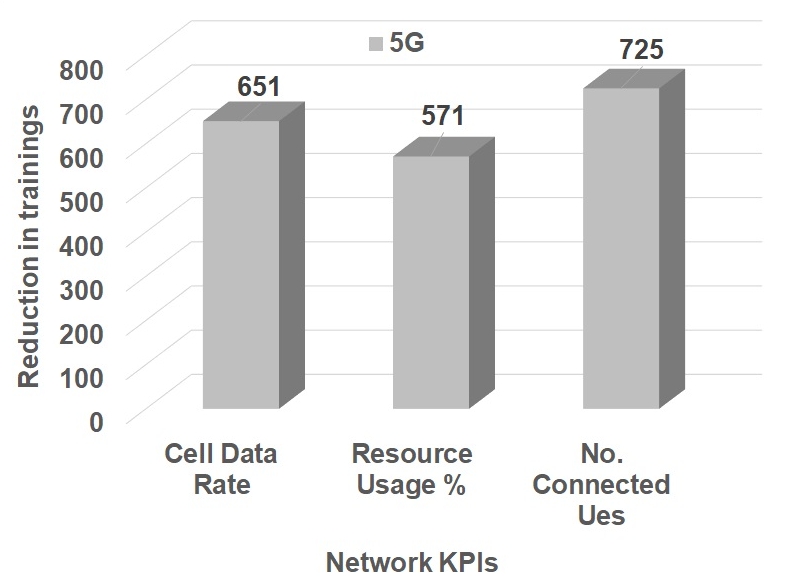

Figure 4 depicts the savings in the number of trainings across the different KPIs for 5G data. We saved 651 trainings for the Cell Data Rate KPI, 571 trainings for the Resource Usage Percentage KPI and 725 trainings for the number of connected UEs KPI. On average, we saved 649 trainings across all the network KPIs.
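The reported average follows directly from the three per-KPI figures:

$$
\frac{651 + 571 + 725}{3} = \frac{1947}{3} = 649
$$

If one assumes that each of the 961 cells would otherwise need one training per KPI (an assumption, since the source does not state the baseline), this corresponds to avoiding roughly $649 / 961 \approx 67.5\%$ of trainings on average.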

5. Summary and Way Forward

This research paper introduces a framework that addresses the automatic selection of ML models and optimization of ML resources. To evaluate the framework, we conducted tests using actual 5G operator data, and the results showcased significant improvements based on the proposed ML measurement KPIs.

In terms of future work, we aim to enhance the cell grouping algorithms by including more parameters. We intend to automate learning from the evaluation application so that the layers to re-train are decided dynamically, rather than relying on a fixed number of re-training epochs. We further plan to enhance the representative cell selection algorithm by including more similarity metric algorithms.